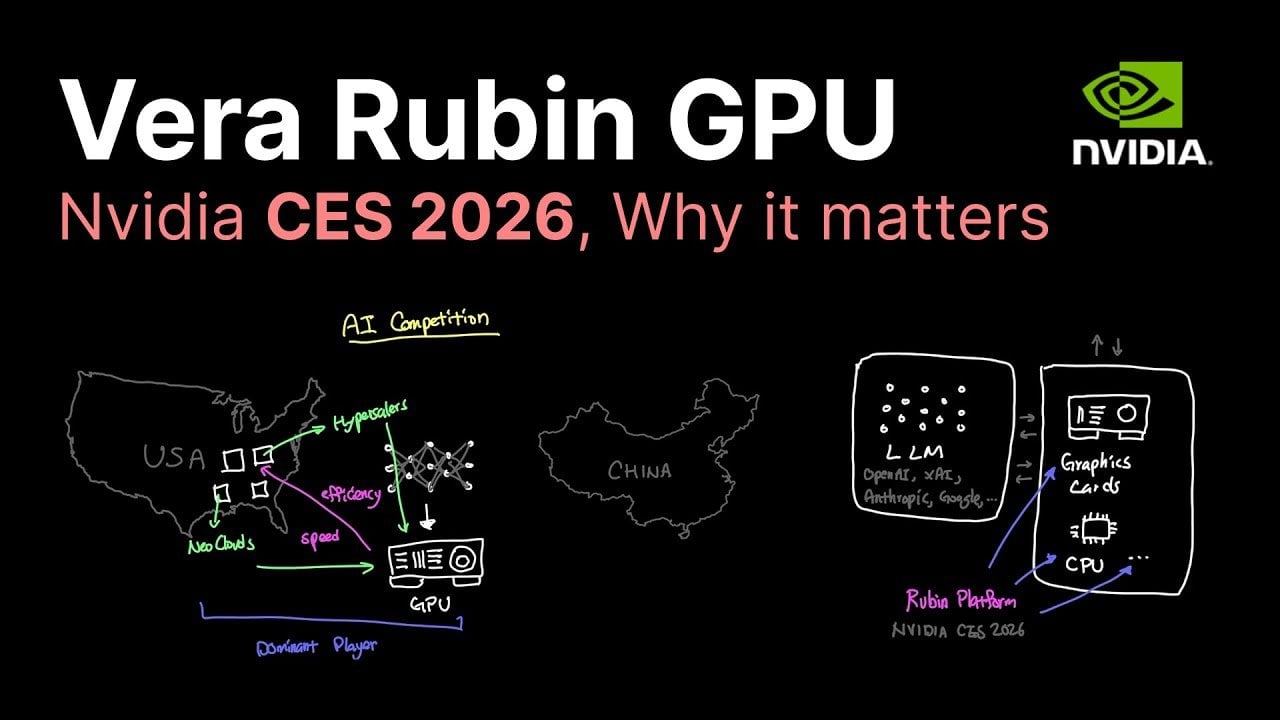

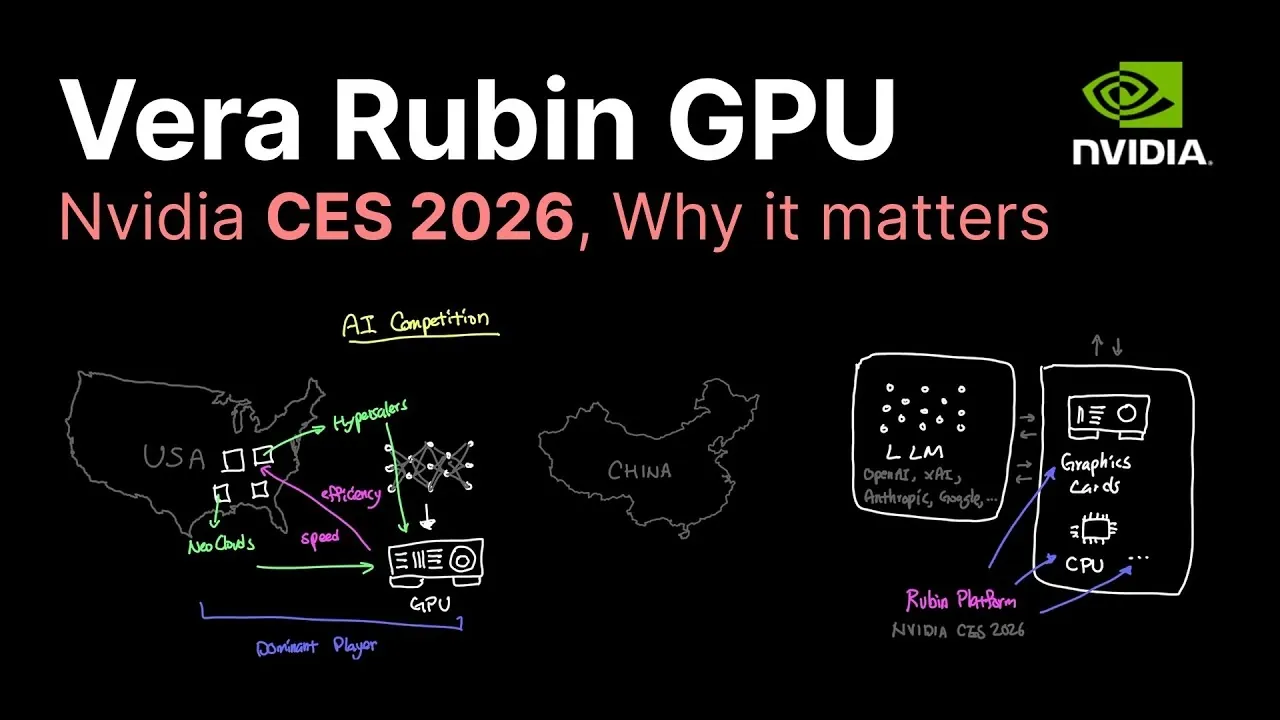

NVIDIA Rubin Platform Adds NVLink 6 at 3.6 TB/s & HBM4 with 22 TB/s Bandwidth

What if the future of AI hardware wasn’t just about speed, but about reshaping the foundation of how artificial intelligence operates? At CES 2026, NVIDIA unveiled the Rubin platform, an innovative suite of components designed to meet the growing demands of agentic AI and robotics. In this overview, Caleb Writes Code explains how the Vera Rubin GPU, the platform’s centerpiece, delivers 35 petaflops for training, 3.5 times the performance of its predecessor. This leap isn’t just about numbers; it’s about enabling AI systems to process complex tasks more efficiently, from large language models to autonomous robotics. If you’ve ever wondered what it takes to power the next wave of AI breakthroughs, this is the hardware redefining the rules.

But what makes the Rubin platform stand out isn’t just its raw power; it’s the integration of six components, each engineered to tackle a specific challenge in AI infrastructure. From the NVLink 6 interconnect, which delivers 3.6 terabytes per second of bandwidth, to the BlueField-4 DPU designed for intricate data handling, NVIDIA has created a system that feels almost futuristic in its ambition. Caleb breaks down how these innovations come together to support real-world applications like retrieval-augmented generation (RAG) and large-scale deployments for hyperscalers. Whether you’re curious about the technical breakthroughs or the broader implications for industries like healthcare and autonomous systems, this guide offers a glimpse into the hardware shaping AI’s next frontier.

NVIDIA Rubin Platform Unveiled at CES 2026

TL;DR Key Takeaways:

- NVIDIA unveiled the Rubin platform at CES 2026, featuring the Vera Rubin GPU and five other advanced components designed to accelerate AI training, inference, and large-scale deployments.

- The platform introduces new technologies like the NVLink 6 interconnect (3.6 TB/s bandwidth) and HBM4 memory (22 TB/s bandwidth), enabling high performance for resource-intensive AI tasks such as large language models (LLMs) and retrieval-augmented generation (RAG).

- Rubin is tailored for the shift toward agentic AI and robotics, addressing the growing demand for autonomous systems capable of complex, real-world applications with minimal human intervention.

- Real-world use cases include hyperscale AI deployments, enhanced customer service, healthcare diagnostics, financial analysis, and autonomous vehicles, offering significant advantages in scalability and efficiency.

- The Rubin platform is expected to reshape the AI hardware market, with major players like OpenAI and CoreWeave adopting it in 2026, reinforcing NVIDIA’s leadership in production-ready AI solutions and global competitiveness.

The Rubin platform is a comprehensive and integrated solution, consisting of six innovative components, each tailored to tackle specific challenges in AI infrastructure. These components include:

- Vera Rubin GPU: A high-performance GPU designed to deliver unparalleled speed and efficiency for both AI training and inference.

- Vera CPU: A processor optimized to manage complex AI workloads with precision and reliability.

- NVLink 6 Switch: Offering an impressive 3.6 terabytes per second of interconnect bandwidth to ensure seamless data flow between components.

- ConnectX-9 SuperNIC: A next-generation network interface card that enhances connectivity with high-speed data transfer capabilities.

- BlueField-4 DPU: A data processing unit designed to accelerate data handling for intricate AI tasks.

- Spectrum-6 Ethernet Switch: An advanced Ethernet switch that supports hyperscale AI deployments with robust connectivity solutions.

Together, these components form a unified platform capable of handling AI inference, training, and deployment at unprecedented scales. By integrating these technologies, NVIDIA has created a system that not only meets but exceeds the demands of modern AI applications.

Addressing the Shift in AI Trends

The AI industry is undergoing a significant transformation, moving beyond the era of generative AI to embrace agentic AI and robotics. This shift reflects the increasing demand for systems capable of autonomous operation, environmental interaction, and executing complex tasks with minimal human intervention. NVIDIA’s Rubin platform is specifically designed to meet these evolving needs, offering hardware that can handle the computational intensity required by agentic AI and robotics applications.

In addition to supporting these advanced AI paradigms, the Rubin platform emphasizes faster training and inference, aligning with the industry’s growing focus on production-ready AI. As organizations prioritize real-world applications, the need for scalable and efficient hardware has become more critical than ever. NVIDIA’s Rubin platform addresses this gap, allowing businesses to deploy AI models more quickly and effectively across a wide range of industries, from healthcare to autonomous systems.

NVIDIA Vera Rubin GPU: CES 2026

Performance Breakthroughs and Technical Innovations

The Rubin platform introduces several technical breakthroughs that raise the bar for AI hardware performance. The Vera Rubin GPU is the standout component: it delivers 35 petaflops for training, which is 3.5 times the training performance of its predecessor, the Blackwell chip, and 50 petaflops for inference. That headroom matters most for the largest and most demanding AI workloads.
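As a quick sanity check on those figures, the short sketch below works backward from the quoted training number to the predecessor throughput it implies. It assumes that "3.5 times faster" means a plain 3.5x ratio and that both figures are measured at the same numeric precision, neither of which the article states. The function name is illustrative only.

```python
# Figures quoted in the article for the Vera Rubin GPU.
RUBIN_TRAINING_PFLOPS = 35.0
RUBIN_INFERENCE_PFLOPS = 50.0
TRAINING_SPEEDUP_VS_BLACKWELL = 3.5  # assumed to be a simple ratio


def implied_baseline(new_value: float, speedup: float) -> float:
    """Return the baseline implied by a new value and a speedup ratio."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return new_value / speedup


# Predecessor training throughput implied by the two quoted numbers.
blackwell_training_pflops = implied_baseline(
    RUBIN_TRAINING_PFLOPS, TRAINING_SPEEDUP_VS_BLACKWELL
)

print(f"Implied Blackwell training throughput: {blackwell_training_pflops:.1f} petaflops")
```

Under those assumptions, the implied predecessor figure is 10 petaflops for training.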

Complementing the GPU is the NVLink 6 interconnect, which provides 3.6 terabytes per second of bandwidth, enabling faster communication between components. Additionally, the platform incorporates HBM4 memory, offering 22 terabytes per second of bandwidth. These innovations are critical for supporting resource-intensive applications such as large language models (LLMs), retrieval-augmented generation (RAG), and other high-performance AI tasks. By reducing latency and increasing throughput, the Rubin platform is built to sustain performance under heavy computational loads.
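To put the two bandwidth figures in perspective, here is a rough back-of-the-envelope sketch of how long it would take to move a fixed amount of data at each rate. The 70 GB payload (a hypothetical 70-billion-parameter model stored at one byte per parameter) is an assumption chosen for illustration, not a figure from the article, and the calculation treats the quoted numbers as sustained rates, which real workloads rarely achieve.

```python
# Bandwidth figures quoted in the article, in terabytes per second.
HBM4_BANDWIDTH_TBPS = 22.0
NVLINK6_BANDWIDTH_TBPS = 3.6

# Hypothetical payload: 70 billion parameters at 1 byte each = 70 GB.
WEIGHTS_GB = 70.0


def transfer_time_ms(size_gb: float, bandwidth_tbps: float) -> float:
    """Milliseconds needed to move size_gb gigabytes at bandwidth_tbps TB/s.

    Uses decimal units (1 TB = 1000 GB) and ignores latency and overhead.
    """
    if bandwidth_tbps <= 0:
        raise ValueError("bandwidth must be positive")
    seconds = size_gb / (bandwidth_tbps * 1000.0)
    return seconds * 1000.0


hbm_ms = transfer_time_ms(WEIGHTS_GB, HBM4_BANDWIDTH_TBPS)
nvlink_ms = transfer_time_ms(WEIGHTS_GB, NVLINK6_BANDWIDTH_TBPS)

print(f"Read 70 GB from HBM4:     {hbm_ms:.2f} ms")
print(f"Send 70 GB over NVLink 6: {nvlink_ms:.2f} ms")
```

With these assumptions, a single pass over the weights takes roughly 3.2 ms from HBM4 and roughly 19.4 ms over NVLink 6, which illustrates why local memory bandwidth tends to dominate when a model must reread its weights for every generated token.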

Real-World Applications and Use Cases

The NVIDIA Rubin platform is designed to power a diverse array of AI applications, ranging from large-scale LLMs to robotics and agentic AI. Its advanced hardware capabilities make it particularly effective for retrieval-augmented generation (RAG), a technique that combines LLMs with external knowledge bases to enhance accuracy and relevance. This capability is especially valuable for industries that rely on precise and context-aware AI systems, such as customer service, healthcare diagnostics, and financial analysis.
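For readers unfamiliar with RAG, the toy sketch below shows the basic pattern: retrieve the most relevant passage from a knowledge base, then place it in the prompt sent to the model. It is a minimal illustration, not how any production system or NVIDIA software works. The word-count cosine similarity stands in for a real embedding model, the knowledge base is three sentences taken from this article, and all function names are hypothetical.

```python
import re
from collections import Counter
from math import sqrt


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Assemble a prompt that grounds the question in retrieved context."""
    context = "\n".join(f"- {passage}" for passage in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Tiny stand-in knowledge base built from statements in this article.
KNOWLEDGE_BASE = [
    "NVLink 6 provides 3.6 terabytes per second of interconnect bandwidth.",
    "HBM4 memory offers 22 terabytes per second of bandwidth.",
    "The BlueField-4 DPU accelerates data handling for AI tasks.",
]

prompt = build_prompt("How much bandwidth does HBM4 memory offer?", KNOWLEDGE_BASE)
print(prompt)
```

In a real deployment the prompt would then be passed to an LLM, and the retrieval step would typically run against a vector database holding many more documents, which is where fast memory and interconnects come into play.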

For hyperscalers and NeoClouds, the Rubin platform offers significant advantages in terms of service-level agreements (SLAs) and token efficiency. By optimizing hardware performance, NVIDIA enables these organizations to deliver faster, more reliable AI services. This is increasingly critical as AI becomes deeply integrated into everyday technologies, from virtual assistants to autonomous vehicles. The Rubin platform’s ability to handle large-scale deployments ensures that businesses can meet the growing demand for AI-driven solutions without compromising on performance or reliability.

Global Impact and Market Implications

The Rubin platform is poised to have a profound impact on the global AI landscape, strengthening the United States’ position in the ongoing competition with other nations, particularly China. By prioritizing faster and more efficient AI hardware, NVIDIA enables organizations to deploy AI solutions at scale, unlocking substantial economic and technological value.

Major players in the AI industry, including OpenAI and CoreWeave, are expected to adopt the Rubin platform in the latter half of 2026. This anticipated adoption underscores the platform’s potential to reshape the AI hardware market, driving innovation and setting new performance benchmarks. NVIDIA’s focus on inference hardware reflects a broader industry trend toward practical, production-ready AI applications, further solidifying its leadership in the field.

As the AI industry continues to evolve, the Rubin platform is set to play a pivotal role in shaping the future of AI infrastructure. Its advanced capabilities and scalable design make it a cornerstone for the next generation of AI technologies, ensuring that businesses and researchers alike can push the boundaries of what is possible with artificial intelligence.

Media Credit: Caleb Writes Code
