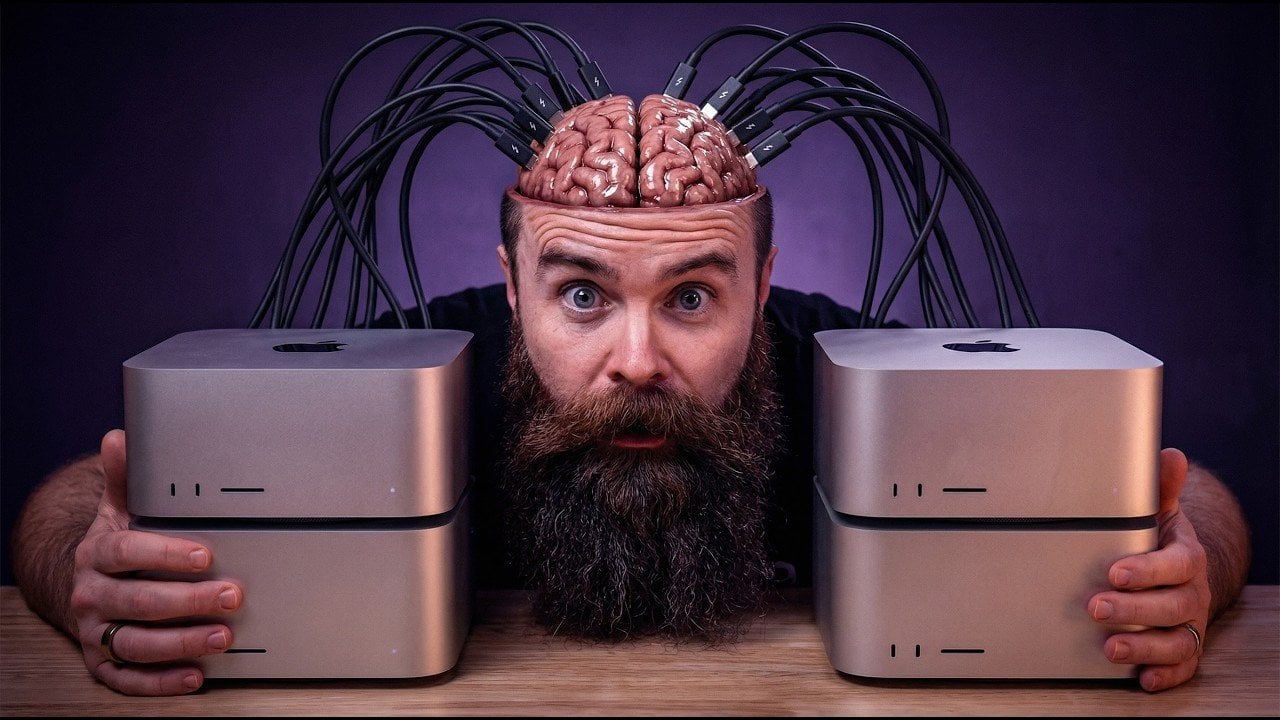

Powerful Apple Mac Studio AI Supercomputer with 2TB of RAM

What if you could build a machine so powerful it could handle trillion-parameter AI models, yet so accessible it could sit right in your home office? In the video, NetworkChuck breaks down how he constructed a local AI supercomputer with a staggering 2TB of RAM, using nothing more than four Mac Studios and some clever optimizations. This isn’t just a tech flex; it’s a bold challenge to the notion that high-performance AI computing is reserved for massive corporations with endless budgets. By combining consumer-grade hardware with techniques like tensor parallelism and RDMA, he has built a system that rivals traditional supercomputers at a fraction of the cost.

This guide will walk you through the key takeaways from his build, including the hardware configuration, the software breakthroughs that slashed latency, and the real-world applications that make this setup more than just a theoretical exercise. Whether you’re curious about how local AI computing can enhance data security or intrigued by the idea of running trillion-parameter models without relying on the cloud, there’s plenty to unpack here. What does it mean when consumer hardware starts to close the gap with enterprise systems? Let’s explore the possibilities, and the challenges, of this new project.

Building a $50K Mac Studio AI Supercomputer

TL;DR Key Takeaways:

- By clustering four Mac Studios with 512GB of unified memory each, a 2TB RAM AI supercomputer can be built for $50,000, offering a cost-effective alternative to traditional systems like Nvidia H100 clusters.

- Key innovations include macOS Tahoe 26.2 with RDMA support, reducing latency from 300 to 3 microseconds, and tensor parallelism, which maximizes the utilization of 320 GPU cores for efficient AI model processing.

- The system successfully handled trillion-parameter models like Kimi K2 and tested AI models such as Llama 3 and DeepSeek, demonstrating its capability for real-world applications with enhanced data security and lower operational costs.

- Challenges included occasional system crashes with macOS Tahoe 26.2 and Thunderbolt bridge constraints, highlighting areas for future improvement in software stability and network monitoring.

- This project showcases the potential of consumer-grade hardware to rival traditional supercomputers, providing widespread access to high-performance AI computing for researchers, developers, and small organizations.

Hardware Configuration: The Foundation of Performance

The hardware setup forms the backbone of this AI supercomputer. Each Mac Studio in the cluster is equipped with:

- 512GB of unified memory, ensuring seamless data sharing between the CPU and GPU.

- 80 GPU cores, providing robust parallel processing power for AI tasks.

- 8TB of storage, offering ample space for datasets and model files.

When combined, the cluster delivers:

- 320 GPU cores, allowing high-speed computation for complex AI models.

- 32TB of storage, sufficient for handling large-scale AI projects.

The system relies on Thunderbolt 5 and Ethernet networking for fast and reliable communication between devices. At an estimated cost of $50,000, this configuration provides a cost-effective alternative to traditional high-performance computing systems, such as Nvidia H100 clusters, which can exceed $780,000. This affordability makes advanced AI computing accessible to smaller organizations and independent researchers.
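The cluster totals and the cost gap follow directly from the per-node figures. The short Python sketch below just redoes that arithmetic; the constant and function names are made up for illustration, and the dollar amounts are the estimates quoted above, not measured prices.

```python
# Per-node figures quoted in the article for each Mac Studio.
NODES = 4
MEMORY_GB_PER_NODE = 512
GPU_CORES_PER_NODE = 80
STORAGE_TB_PER_NODE = 8

# Cost estimates quoted in the article, in US dollars.
CLUSTER_COST_USD = 50_000
H100_CLUSTER_COST_USD = 780_000


def cluster_totals(nodes: int) -> dict:
    """Aggregate the per-node resources across the whole cluster."""
    return {
        "memory_gb": nodes * MEMORY_GB_PER_NODE,
        "gpu_cores": nodes * GPU_CORES_PER_NODE,
        "storage_tb": nodes * STORAGE_TB_PER_NODE,
    }


totals = cluster_totals(NODES)
cost_ratio = H100_CLUSTER_COST_USD / CLUSTER_COST_USD

print(totals)
print(f"The quoted H100 figure is about {cost_ratio:.1f}x the Mac Studio cluster cost")
```

Four nodes at 512GB each give 2,048GB, which is where the 2TB headline figure comes from.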

Overcoming Latency with RDMA and macOS Tahoe 26.2

One of the most significant challenges in clustering Mac Studios was addressing latency. Initial attempts faced delays of up to 300 microseconds, causing performance drops of up to 91%. This issue was resolved with the introduction of macOS Tahoe 26.2, which includes support for Remote Direct Memory Access (RDMA). RDMA reduces latency to just 3 microseconds, allowing faster communication between GPUs and significantly improving the cluster’s overall efficiency.

The integration of RDMA allows data to bypass the CPU during transfers, directly accessing memory across devices. This innovation ensures that the cluster operates at peak performance, making it capable of handling demanding AI workloads with minimal delays.
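To see why per-message latency matters so much, consider a rough model in which every generated token requires a fixed number of cross-node synchronizations. The sketch below uses the two latency figures from the article; the number of synchronization points per token is a hypothetical value chosen only for illustration, and the model ignores bandwidth and compute time entirely.

```python
# Latencies quoted in the article, in microseconds.
LATENCY_BEFORE_US = 300.0
LATENCY_RDMA_US = 3.0

# Hypothetical: how many times the nodes must exchange data per token.
SYNC_POINTS_PER_TOKEN = 120


def comm_overhead_ms(sync_points: int, latency_us: float) -> float:
    """Time spent waiting on link latency for one token, in milliseconds."""
    return sync_points * latency_us / 1000.0


before_ms = comm_overhead_ms(SYNC_POINTS_PER_TOKEN, LATENCY_BEFORE_US)
after_ms = comm_overhead_ms(SYNC_POINTS_PER_TOKEN, LATENCY_RDMA_US)
reduction = LATENCY_BEFORE_US / LATENCY_RDMA_US

print(f"Latency overhead per token before RDMA: {before_ms:.2f} ms")
print(f"Latency overhead per token with RDMA:   {after_ms:.2f} ms")
print(f"Per-message latency reduction: {reduction:.0f}x")
```

Under this simplified model the waiting time scales linearly with latency, so a 100x drop in per-message latency removes almost all of the communication stall, whatever the real number of synchronization points turns out to be.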

Mac Studio Cluster Hits 3x Speed Boost with RDMA on Tahoe 26.2


Optimizing Performance with Tensor Parallelism

To further enhance the cluster’s capabilities, the system transitioned from pipeline parallelism to tensor parallelism. Pipeline parallelism assigns whole groups of layers to each machine, so the machines work one after another. Tensor parallelism instead splits the weight tensors inside each layer into shards, which are processed simultaneously across multiple GPUs. This keeps all of the cluster’s 320 GPU cores busy at once and distributes the computation more evenly.

When combined with RDMA, tensor parallelism tripled the system’s performance compared to earlier configurations. The cluster successfully managed trillion-parameter models, such as Kimi K2, showcasing its ability to handle some of the most complex AI models available today. This optimization highlights the potential of consumer-grade hardware to rival traditional supercomputers in specific applications.
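The core idea of tensor parallelism can be illustrated with a single matrix multiplication. The sketch below is a toy, pure-Python simulation rather than the software used in the video: it splits a weight matrix into column shards, lets each simulated device compute its own slice of the output, and then concatenates the slices. All function names are hypothetical, and a real system would run the shards concurrently on separate GPUs.

```python
def matmul(x, w):
    """Multiply a vector x (length n) by a matrix w (n rows, m columns)."""
    cols = len(w[0])
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(cols)]


def split_columns(w, parts):
    """Split matrix w into `parts` column blocks of equal width."""
    cols = len(w[0])
    if cols % parts != 0:
        raise ValueError("columns must divide evenly across devices")
    width = cols // parts
    return [[row[p * width:(p + 1) * width] for row in w] for p in range(parts)]


def tensor_parallel_matmul(x, w, devices):
    """Each simulated device multiplies x by its own column shard of w."""
    shards = split_columns(w, devices)
    # On real hardware these partial products would be computed in parallel.
    partials = [matmul(x, shard) for shard in shards]
    # Gather step: concatenate the partial outputs in device order.
    return [value for partial in partials for value in partial]


x = [1.0, 2.0]
w = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0]]

single_device = matmul(x, w)
four_devices = tensor_parallel_matmul(x, w, devices=4)
print(single_device == four_devices)
```

The gather step is where the nodes have to talk to each other, and it happens for every layer. That is why this scheme only pays off once the interconnect latency is low.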

Testing and Real-World Applications

The cluster underwent rigorous testing with a variety of AI models, including:

- Llama 3, a state-of-the-art natural language processing model.

- DeepSeek, a family of open-weight large language models.

- Kimi K2, a trillion-parameter model used for large-scale AI research.

These tests confirmed the system’s compatibility with real-world applications such as Open WebUI and Xcode. Running these models locally offers several advantages, including enhanced data security by reducing reliance on cloud-based solutions and lower operational costs by eliminating recurring cloud service fees. This capability is particularly valuable for organizations that handle sensitive data or operate on tight budgets.
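Whether a trillion-parameter model fits in the cluster’s memory depends mostly on how many bits are used to store each weight. The sketch below is a back-of-the-envelope estimate under stated assumptions: it counts weights only, ignoring activations and the key-value cache, it treats GB as a rough decimal unit, and the precisions listed are common choices rather than the ones confirmed in the video.

```python
# Total unified memory across four 512GB Mac Studios.
CLUSTER_MEMORY_GB = 4 * 512

# One trillion parameters, as in the article's largest test model.
PARAMS = 1e12


def weights_gb(params: float, bits_per_param: int) -> float:
    """Approximate memory needed for the weights alone, in GB."""
    return params * bits_per_param / 8 / 1e9


for bits in (16, 8, 4):
    size = weights_gb(PARAMS, bits)
    headroom = CLUSTER_MEMORY_GB - size
    print(f"{bits:>2}-bit weights: {size:,.0f} GB, headroom {headroom:,.0f} GB")
```

At 16 bits per weight the model alone would consume nearly all of the 2TB, while lower-precision formats leave room for the working memory that inference needs.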

Affordability and Accessibility

At a price point of $50,000, this AI supercomputer represents a significant step toward providing widespread access to high-performance AI computing. It provides researchers, developers, and small organizations with the tools needed to innovate in fields such as machine learning, application development, and scientific research. By bridging the gap between consumer-grade hardware and enterprise-level capabilities, this project opens new doors for experimentation and discovery.

Challenges and Areas for Improvement

Despite its impressive achievements, the project encountered several challenges that highlight areas for future improvement:

- The beta version of macOS Tahoe 26.2 occasionally caused system crashes, indicating the need for further software refinement to ensure stability.

- Thunderbolt bridge constraints limited the ability to monitor network traffic effectively, complicating the diagnosis of performance bottlenecks.

These issues underscore the importance of continued development in both hardware and software to fully realize the potential of local AI clustering.

Exploring the Future of Local AI Clustering

This project serves as a compelling proof of concept for the viability of local AI clustering using consumer-grade hardware. Addressing the current limitations and building on ongoing advances in networking and software could unlock new possibilities in high-performance computing. As technology continues to evolve, local AI clusters have the potential to rival traditional supercomputers, offering scalable and accessible solutions for a wide range of applications, from academic research to industrial innovation.

The development of this AI supercomputer demonstrates how innovative hardware and optimized software can deliver exceptional performance at a fraction of the cost of traditional systems. This achievement not only highlights the feasibility of local AI computing but also encourages further exploration into clustering technologies and their practical applications.

Media Credit: NetworkChuck
