NotebookLM Upgrade Turns Research Hours into Minutes

What if you could condense 10 hours of painstaking research into just a few minutes? That's the kind of efficiency NotebookLM promises. Universe of AI walks through how the platform is reshaping the research process, turning traditionally time-consuming tasks into largely automated workflows. Imagine generating polished overviews, clear infographics, or concise slide decks, all tailored to your specific needs. By synthesizing complex material into actionable insights, NotebookLM aims to be more than another productivity upgrade; it offers a bold new way of approaching information.

In this update overview, we'll explore how NotebookLM's blend of automation and customization helps users tackle even demanding projects. Whether you're a student juggling deadlines, a professional preparing for a high-stakes presentation, or a content creator striving for efficiency, there's something here for everyone. You'll see how the platform goes beyond simply answering questions, offering structured outputs that save time without sacrificing quality. Could this be the future of research? Let's unpack the possibilities and see how it might change the way you work.

Streamlined Research with NotebookLM

TL;DR Key Takeaways:

- NotebookLM is an innovative research platform designed to automate time-intensive tasks, letting users efficiently create tailored outputs such as research briefs, overviews, slide decks, and infographics.

- The platform streamlines the research process by identifying, evaluating, and synthesizing data, offering customizable templates and structured outputs to suit diverse needs.

- NotebookLM emphasizes customization, allowing users to adjust structure, tone, style, and detail, making it ideal for applications like study guides, competitive analyses, and professional presentations.

- It boosts productivity by quickly transforming unstructured data into actionable insights while aiming to maintain high standards of quality and accuracy.

- NotebookLM serves a wide audience, including academics, professionals, and content creators, by functioning as a comprehensive research agent rather than a conventional chatbot, focusing on delivering actionable and polished results.

Core Capabilities of NotebookLM

NotebookLM is purpose-built to tackle the most labor-intensive aspects of research. Instead of manually sifting through vast amounts of information, you let the platform identify, evaluate, and synthesize relevant data on your behalf. Whether your goal is a detailed overview, an engaging infographic, or a concise slide deck, NotebookLM turns raw material into polished, structured outputs. Automated synthesis speeds up the work, while customizable source selection helps keep the results relevant and precise. This combination of automation and adaptability makes it a valuable tool for anyone looking to streamline their research process.

How NotebookLM Works: Features and Workflow

NotebookLM offers a streamlined yet powerful workflow that simplifies the research process while saving you significant time and effort. The platform operates through a series of intuitive steps:

- Create dedicated notebooks to organize your projects and keep your work structured.

- Upload your own sources or search for relevant materials directly within the platform.

- Generate research briefs that summarize key insights from multiple sources, providing a clear and concise overview.

- Use customizable templates to produce overviews, slide decks, or infographics tailored to your specific objectives.

This efficient process eliminates hours of manual work, allowing you to focus on higher-level tasks such as analysis and decision-making. Whether you’re preparing for a critical presentation or drafting a strategic document, NotebookLM adapts seamlessly to your unique requirements.


Customization: Meeting Diverse Needs

One of NotebookLM’s most compelling features is its flexibility, which allows you to tailor outputs to suit your specific goals. The platform enables you to adjust the structure, tone, style, and level of detail in your deliverables, making it suitable for a wide range of applications. Some of the key use cases include:

- Developing comprehensive study guides or educational materials.

- Conducting in-depth competitive analyses for business or market research.

- Designing professional-grade slide decks or visually engaging infographics.

Additionally, NotebookLM offers various visual formatting options, allowing you to choose styles and orientations that best align with your audience’s preferences. This ensures that your findings are not only accurate but also presented in a polished and professional manner.

Efficiency: Maximizing Productivity Without Compromising Quality

NotebookLM is engineered to enhance productivity by automating the transformation of unstructured data into actionable outputs. Tasks that traditionally required hours of manual effort can now be completed in a fraction of the time. This frees you to spend more energy interpreting results, making strategic decisions, or refining your final outputs. Despite its speed, the platform is designed to keep a strong focus on quality and accuracy, helping your work meet a high standard.

Who Benefits Most from NotebookLM?

The versatility of NotebookLM makes it a valuable resource across a wide range of fields and professions.

- Academics: Effortlessly compile research papers, literature reviews, or study guides, saving time for deeper analysis.

- Professionals: Prepare for meetings, presentations, or strategic planning sessions with well-organized and visually appealing materials.

- Content Creators: Generate polished blog posts, infographics, or slide decks with minimal effort, enhancing the quality of your content.

Regardless of your role, NotebookLM adapts to your specific needs, allowing you to work more efficiently and achieve better results in less time.

What Distinguishes NotebookLM?

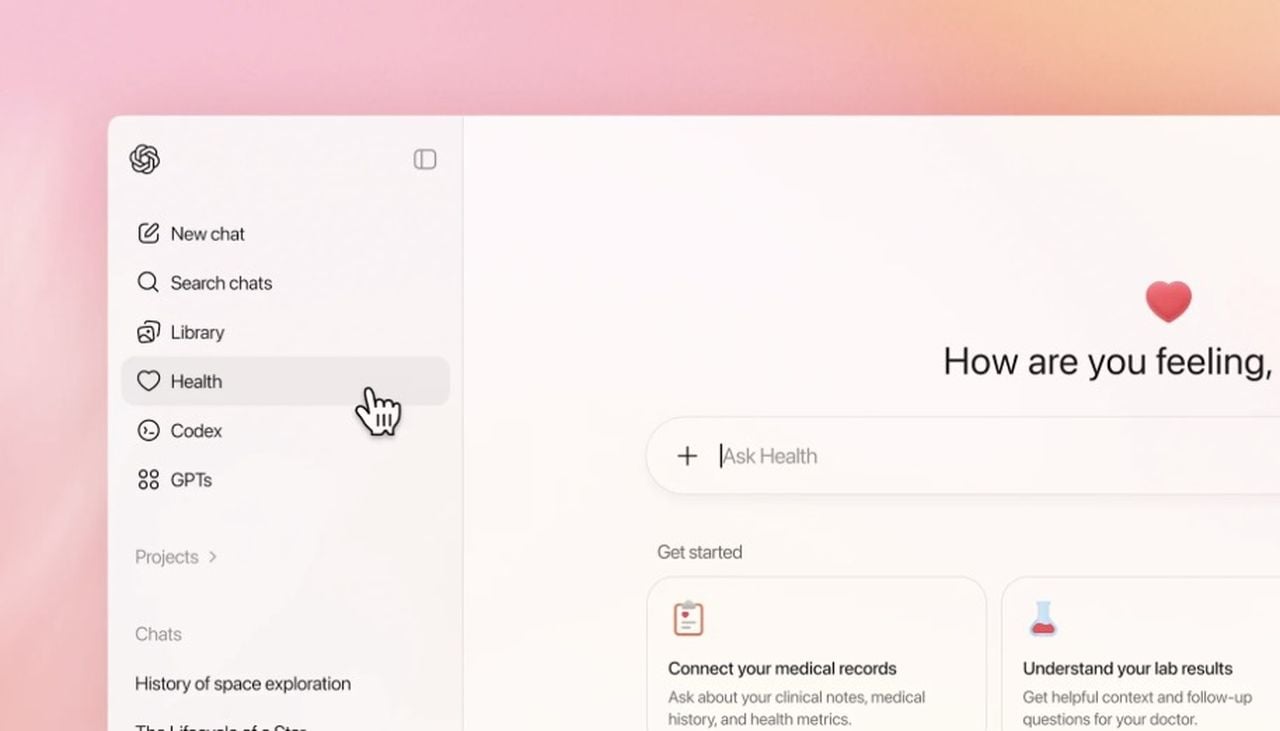

Unlike traditional research tools or chatbots, NotebookLM functions as a comprehensive research agent. Its capabilities extend beyond simply answering questions, focusing instead on delivering structured, actionable results. By automating labor-intensive tasks such as data synthesis and formatting, the platform enables you to concentrate on deriving insights and creating impactful outputs. This unique approach sets NotebookLM apart, making it an indispensable tool for anyone who values precision, efficiency, and high-quality results.

Elevating Research to New Heights

NotebookLM represents a significant advancement in the way research is conducted. By combining automation, customization, and a focus on actionable results, it caters to a wide array of needs while maintaining a commitment to accuracy and relevance. Whether you’re a student aiming to streamline your studies, a professional seeking to optimize your workflow, or a content creator looking to enhance your output, NotebookLM offers a powerful solution to help you achieve your goals with ease and efficiency.

Media Credit: Universe of AI


Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, Geeky Gadgets may earn an affiliate commission.